How Far Should You Look? The Effects of Reward Sparsity on Resource-Rational Planning

The Breadth-Depth Dilemma

Decision-making under resource constraints is a fundamental cognitive challenge. Living beings must balance the benefits of extensive planning against the costs of mentally simulating potential outcomes.

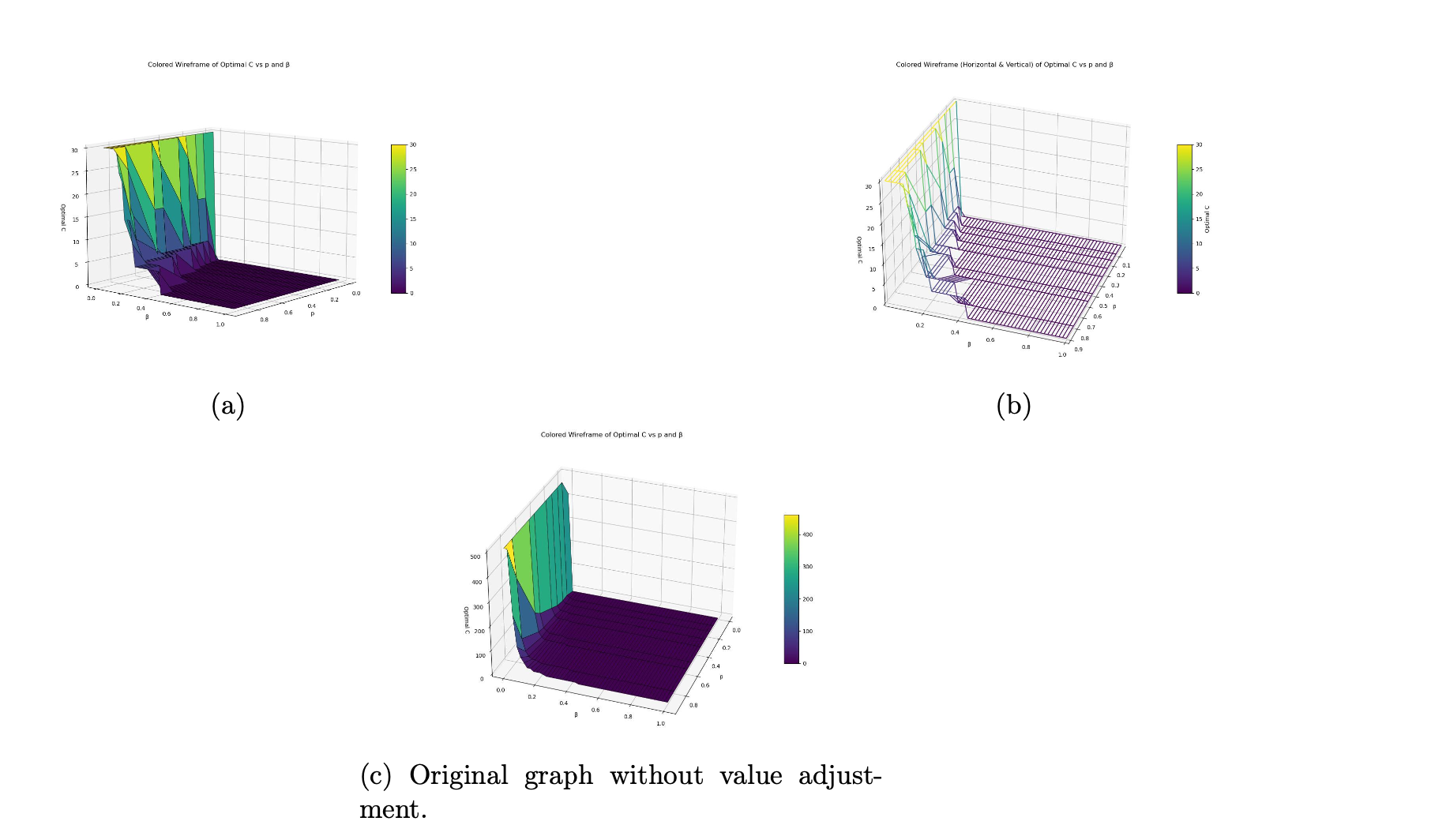

In this project, we investigated how reward sparsity (how rare good options are) influences optimal planning strategies. We adopted a two-phase framework where agents first allocate a limited sampling capacity to “imagine” decision paths, and then commit to a route.

The Impact of Thinking Costs

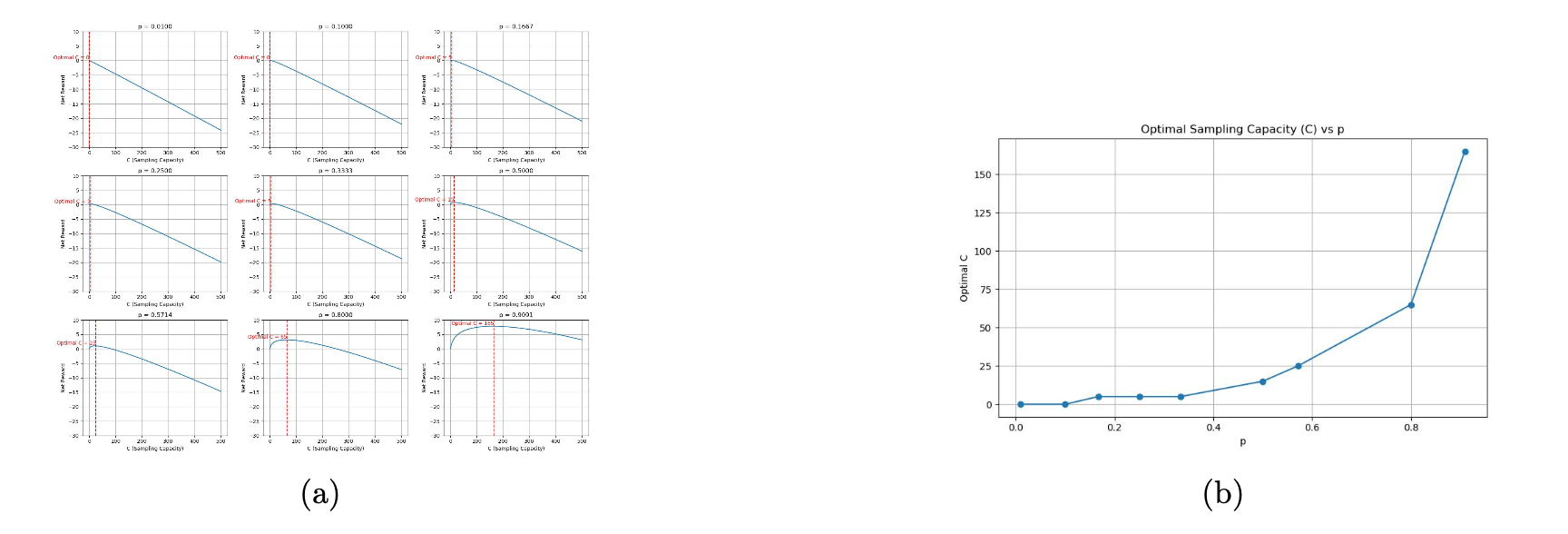

We introduced a linear cost for every “mental sample” the agent takes. Our model reveals that the optimal amount of planning is highly sensitive to the cost of information.
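The trade-off can be made concrete with a deliberately simple toy model. This is an illustrative assumption, not the model used in the thesis. Each mental sample independently reveals a rewarding path with probability `p`. The agent takes a revealed path if one exists and otherwise guesses blindly, succeeding with probability `p`. Every sample costs a fixed amount `cost`. The function names and the `capacity` parameter are hypothetical.

```python
def expected_net_utility(n, p, cost):
    """Expected reward minus linear thinking cost for n samples (toy model).

    Assumption: the agent fails only if all n samples AND the final blind
    guess miss, which happens with probability (1 - p) ** (n + 1).
    """
    gross = 1.0 - (1.0 - p) ** (n + 1)
    return gross - cost * n


def optimal_samples(p, cost, capacity):
    """Brute-force the sample count in 0..capacity with the highest net utility."""
    return max(range(capacity + 1),
               key=lambda n: expected_net_utility(n, p, cost))


if __name__ == "__main__":
    # Raising the per-sample cost shrinks the optimal amount of planning.
    for cost in (0.0, 0.01, 0.05, 0.2):
        print(f"cost={cost:<5} optimal samples={optimal_samples(0.3, cost, 20)}")
```

With zero cost the toy agent uses its full capacity. As the cost rises, the optimum falls quickly, which is the sensitivity described above.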

Key Findings

- The “Giving Up” Threshold: In environments where positive rewards are extremely sparse ($p \le 0.1$), agents rationally choose not to plan at all once the per-sample cost exceeds a threshold $C^*$, which is close to zero in this regime ($C^* \approx 0$).

- Bounded Rationality: Even in “rich” environments where rewards are abundant, the optimal amount of planning remains surprisingly modest. The agent finds a “golden spot” where the marginal gain of one more mental simulation equals its cost, often stopping far short of exhaustive exploration.
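
The marginal condition behind both findings can be worked through in a simple toy model. The model is an assumption for illustration, not the one used in the thesis. Suppose each of $n$ samples independently reveals a rewarding path with probability $p$, a failed search ends in a blind guess that succeeds with probability $p$, and each sample costs $c$. Then the expected net utility and the gain from one more sample are:

$$
U(n) = 1 - (1-p)^{n+1} - c\,n, \qquad
U(n+1) - U(n) = p\,(1-p)^{n+1} - c .
$$

The marginal gain shrinks geometrically in $n$, so in this sketch the agent should keep sampling only while $p\,(1-p)^{n+1} > c$. Not planning at all is optimal whenever $c \ge p\,(1-p)$, a bound that goes to zero as rewards become sparse ($p \to 0$). The toy's numerical values need not match those of the full model.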

A Phase Transition in Strategy

We observed a distinct “tipping point” in optimal behavior. Below a certain level of environmental richness, the agent relies on pure exploitation (guessing). Once the environment becomes sufficiently rich, the agent rapidly ramps up exploratory planning.
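A tipping point of this kind already shows up in a very simple toy model. The sketch below is an illustrative assumption, not the thesis model. Each sample independently reveals a rewarding path with probability `p`, a failed search ends in a blind guess that succeeds with probability `p`, and the agent keeps sampling while the expected gain of one more sample exceeds `cost`. The function name, the `capacity` cap, and the chosen values are hypothetical.

```python
def optimal_samples(p, cost, capacity=50):
    """Greedy stopping rule for the toy model.

    Assumption: after n samples, one more sample raises expected reward by
    p * (1 - p) ** (n + 1). This gain only shrinks as n grows, so stopping
    the first time it no longer exceeds the cost is optimal in this toy.
    """
    n = 0
    while n < capacity and p * (1.0 - p) ** (n + 1) > cost:
        n += 1
    return n


if __name__ == "__main__":
    cost = 0.05  # hypothetical per-sample cost
    # Sweep environmental richness from barren to abundant.
    for p in (0.01, 0.03, 0.05, 0.06, 0.1, 0.2, 0.3, 0.5):
        print(f"p={p:<5} optimal samples={optimal_samples(p, cost)}")
```

In this toy the optimum stays at zero until `p * (1 - p)` exceeds the cost, then climbs to several samples over a narrow range of `p`. It shrinks again when rewards are abundant, because a blind guess is already likely to pay off.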

Implications

These results suggest that deep, extensive planning is often suboptimal in realistic scenarios where thinking is costly. Instead, the brain may use a context-sensitive planning schedule: minimizing cognitive effort in barren environments and increasing planning effort only when the environment promises a high return on investment.

- Work: Master’s Thesis, University of Pennsylvania.