Slow and Steady: Auditory Features for Discriminating Animal Vocalizations

Overview

The Question: How do animals effectively discriminate and parse vocalizations (such as bird songs, human speech, or macaque coos) despite substantial variation between individuals and species?

The Hypothesis: We proposed that vocalizations share an underlying “temporal regularity”: spectro-temporal correlations that change smoothly over time. We hypothesized that the auditory system exploits these “slow” features to identify sounds robustly.

The Method: We applied Slow Feature Analysis (SFA), an unsupervised learning algorithm, to vocalization datasets from three distinct groups:

- Birds: Blue jay, house finch, American yellow warbler, great blue heron

- Humans: English speakers pronouncing digits

- Macaques: Coo vocalizations

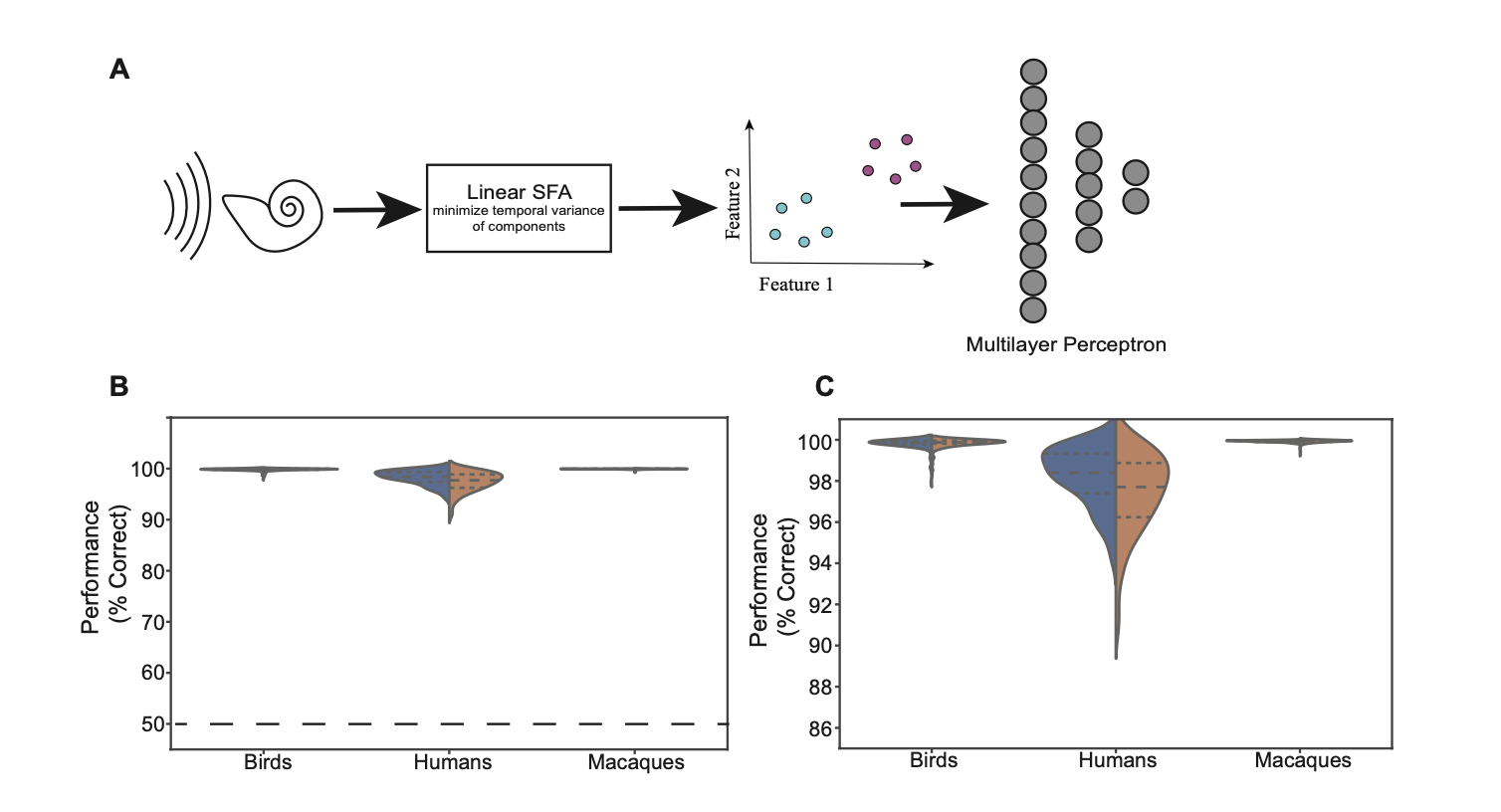

We projected these sounds into a feature space optimized for “slowness” and tested discrimination accuracy using a simple feed-forward neural network (Multilayer Perceptron).
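To make the idea of a feature space optimized for “slowness” concrete, here is a minimal sketch of linear SFA in NumPy. It is an illustration under stated assumptions, not the pipeline used in this work: the function name `linear_sfa`, the toy two-channel input, and the choice of a purely linear (unexpanded) feature space are all hypothetical, and the sketch assumes the input covariance is full rank.

```python
import numpy as np

def linear_sfa(x, n_features):
    """Return the n_features slowest linear features of x (shape: time x dims).

    Illustrative sketch only; assumes the covariance of x is full rank.
    """
    x = x - x.mean(axis=0)                      # center each input dimension
    # Whiten: afterwards every projection has unit variance and is decorrelated.
    cov = x.T @ x / len(x)
    eigval, eigvec = np.linalg.eigh(cov)
    whitener = eigvec / np.sqrt(eigval)         # scale each eigenvector column
    z = x @ whitener
    # Slowness objective: minimize the variance of the temporal difference.
    dz = np.diff(z, axis=0)
    dcov = dz.T @ dz / len(dz)
    d_eigval, d_eigvec = np.linalg.eigh(dcov)   # eigenvalues in ascending order
    weights = whitener @ d_eigvec[:, :n_features]
    return x @ weights, d_eigval[:n_features]

# Toy input (hypothetical): two channels that mix a slow and a fast sinusoid.
t = np.linspace(0, 2 * np.pi, 2000)
slow, fast = np.sin(t), np.sin(40 * t)
mixed = np.column_stack([slow + fast, slow - 0.5 * fast])

features, slowness_values = linear_sfa(mixed, n_features=1)
recovery = abs(np.corrcoef(features[:, 0], slow)[0, 1])
print(f"|correlation| between slowest feature and slow source: {recovery:.3f}")
```

The smallest eigenvalues of the difference covariance correspond to the slowest directions, so ordering features by eigenvalue gives the “slowest first” ranking that the findings below refer to.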

Key Findings

1. High Discrimination Performance

Our model achieved >95% classification accuracy across all animal groups, both between classes (inter-class) and within classes (intra-class), indicating that temporal regularity is sufficient for robust vocalization discrimination.

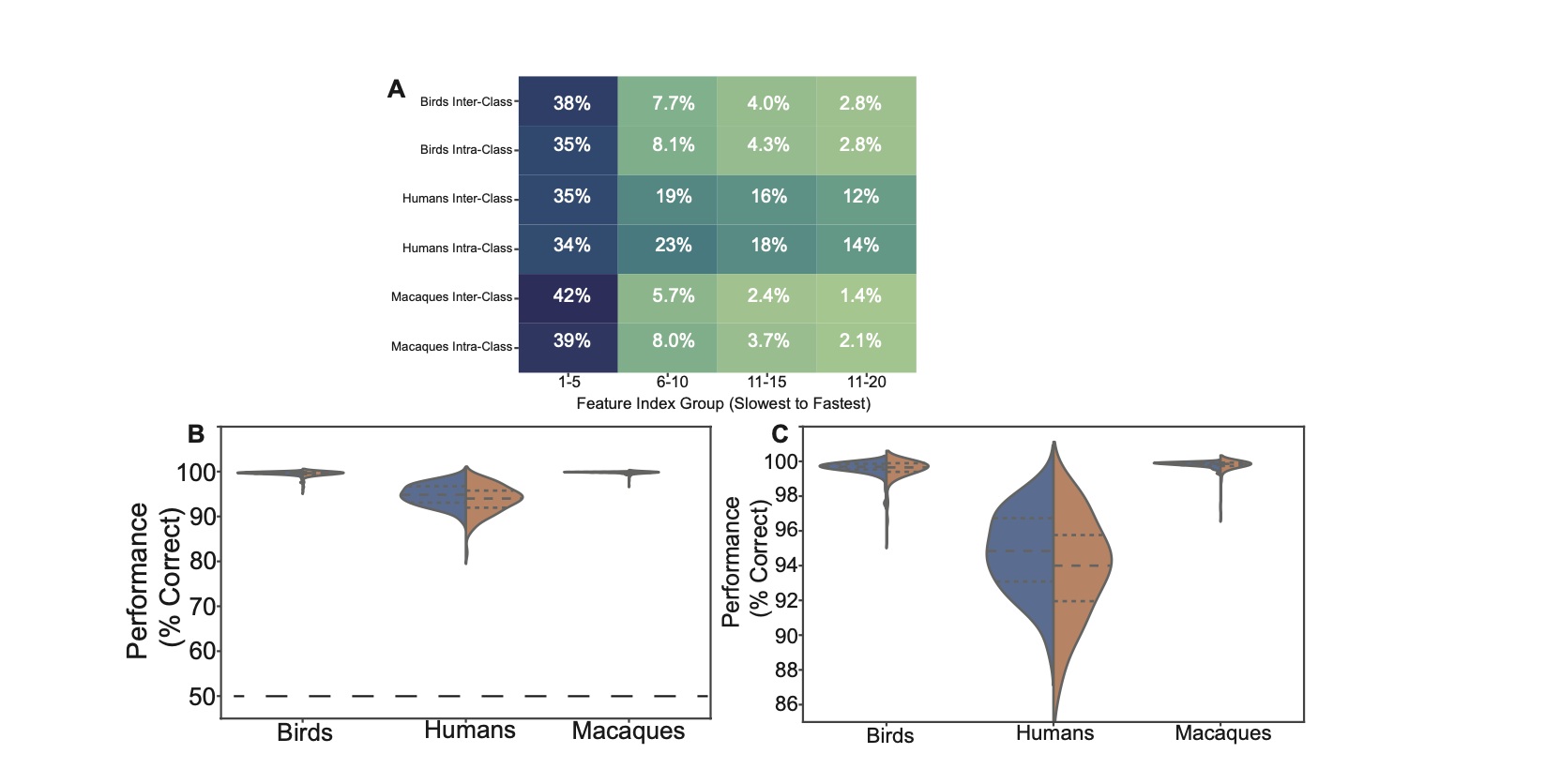

2. The “Slowest” Features Matter Most

When we systematically shuffled features to disrupt the information they carry, we found that performance depended primarily on roughly the 10 slowest features. This suggests that biological auditory systems may be optimized to track these specific, slowly varying components of sound.
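The logic of a shuffling test can be sketched as follows. This is a hedged illustration rather than the procedure used here: the data are synthetic, a nearest-centroid classifier stands in for the Multilayer Perceptron, shuffling is applied across test samples, and all names (`nearest_centroid_accuracy`, `shuffle_features`, the feature counts) are hypothetical. The first few columns play the role of the slowest features.

```python
import numpy as np

rng = np.random.default_rng(0)

def nearest_centroid_accuracy(train_x, train_y, test_x, test_y):
    """Classify each test sample by its closest class mean (stand-in classifier)."""
    classes = np.unique(train_y)
    centroids = np.stack([train_x[train_y == c].mean(axis=0) for c in classes])
    dists = ((test_x[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    return float((classes[dists.argmin(axis=1)] == test_y).mean())

def shuffle_features(x, columns, rng):
    """Permute the chosen columns across samples, destroying their class information."""
    shuffled = x.copy()
    for c in columns:
        shuffled[:, c] = rng.permutation(shuffled[:, c])
    return shuffled

# Synthetic data (assumption): 20 features, only the first 3 carry class information.
n_samples, n_dims, n_informative = 400, 20, 3
y = rng.integers(0, 2, size=n_samples)
x = rng.normal(size=(n_samples, n_dims))
x[:, :n_informative] += 3.0 * y[:, None]
train, test = slice(0, 300), slice(300, n_samples)

baseline = nearest_centroid_accuracy(x[train], y[train], x[test], y[test])
shuffled_slowest = nearest_centroid_accuracy(
    x[train], y[train],
    shuffle_features(x[test], range(n_informative), rng), y[test])
shuffled_rest = nearest_centroid_accuracy(
    x[train], y[train],
    shuffle_features(x[test], range(n_informative, n_dims), rng), y[test])

print(f"baseline={baseline:.2f}  slowest shuffled={shuffled_slowest:.2f}  "
      f"rest shuffled={shuffled_rest:.2f}")
```

If accuracy collapses only when the leading columns are shuffled, the classifier depends on those features and not on the remainder, which is the pattern described above for the slowest features.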

3. Slowness Equals Predictability

We compared our results with Predictable Feature Analysis (PFA) and found that the “slow” features found by SFA are highly correlated with the most “predictable” features of the sound. This links two major computational theories of auditory perception: that the brain looks for continuity (slowness) and that it looks for predictability.
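For intuition about why slowness and predictability can go together, the toy example below compares a slowness score with a linear one-step prediction error on synthetic signals. It is not PFA and not the comparison performed in this work: the AR(1) test signals, the order-1 linear predictor, and the function names are assumptions made purely for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)

def ar1_signal(rho, n, rng):
    """Unit-variance AR(1) signal; rho closer to 1 gives slower variation."""
    noise = rng.normal(size=n)
    s = np.zeros(n)
    for i in range(1, n):
        s[i] = rho * s[i - 1] + noise[i]
    return (s - s.mean()) / s.std()

def slowness(s):
    """Mean squared temporal difference (smaller means slower)."""
    return float(np.mean(np.diff(s) ** 2))

def ar_prediction_error(s, order=1):
    """Mean squared error of a least-squares linear predictor from past samples."""
    lagged = np.column_stack(
        [s[order - k - 1 : len(s) - k - 1] for k in range(order)])
    target = s[order:]
    coef, *_ = np.linalg.lstsq(lagged, target, rcond=None)
    return float(np.mean((target - lagged @ coef) ** 2))

rhos = [0.5, 0.9, 0.99]                       # hypothetical time scales
signals = [ar1_signal(rho, 5000, rng) for rho in rhos]
slowness_scores = [slowness(s) for s in signals]
prediction_errors = [ar_prediction_error(s) for s in signals]

for rho, sl, err in zip(rhos, slowness_scores, prediction_errors):
    print(f"rho={rho}: slowness={sl:.3f}, prediction error={err:.3f}")
```

In this toy setting the two scores rank the signals identically. That holds for these AR(1) signals by construction and is only meant to illustrate the kind of relationship reported above for vocalization features.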

Future Directions

These results suggest that auditory circuits might be specifically adapted to extract temporally continuous modulations. Future work will investigate how neural circuits in the auditory pathway might implement these SFA-like computations.

Work

Accepted at COSYNE 2023; the full paper is available on arXiv.